Humane

Stealth Startup Humane Hints at Future Device in New Teaser Film.

Entitled “Change Everything”, the stunning one-minute video offers an enigmatic first look at what the company could be building. Directed by Ryan Staake with music by Nas featuring Kanye West & The Dream, the newly released Humane film portrays a young woman escaping a sea of lost souls enslaved to their devices (including mobile phones, VR headsets, and wrist-worn accessories, which I’ll presume are smart watches).

She’s the only person awake to the natural world around her, looking upward at the sky rather than down at a glass slab. The protagonist leaves the crowd behind, entering a lush forest as she follows the light of an eclipse. She stretches her palm towards the eclipse (which, coincidentally, is in the shape of Humane’s logo), and when she turns her arm back around towards her body, the eclipse logo has been transferred and projected onto the palm of her hand. Interestingly, she is still looking upward, heads up, to the projection in her hand and the world around her, in stark contrast to the zombies she left behind, with their slumped body language, fully consumed by and engrossed in their devices.

So what clue does this hand projection in the film offer?

What if Humane’s forthcoming device was screenless with the ability to project onto any surface including your hand? If this is in fact what Humane is building, I’m here for it.

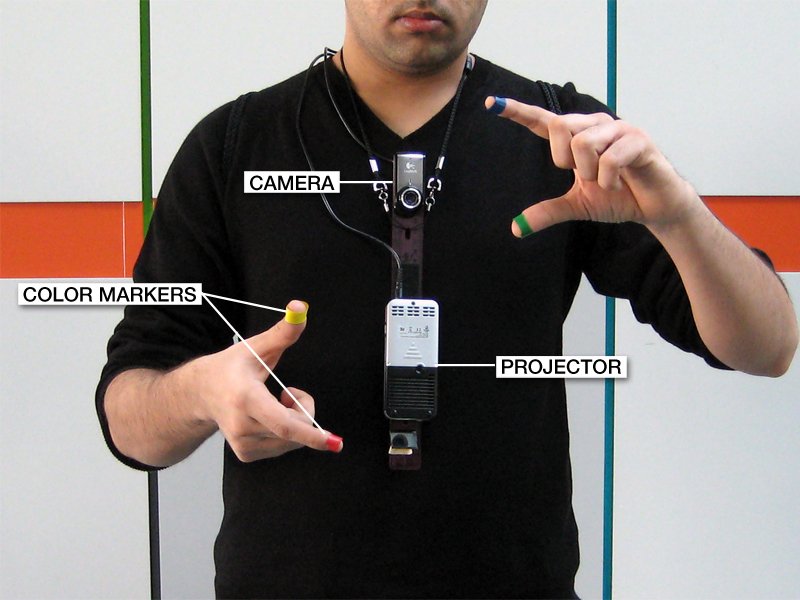

The first thing that came to mind after watching Humane’s film was SixthSense, a wearable gestural interface from 2009 by Pranav Mistry and Pattie Maes at MIT’s Fluid Interfaces Group.

SixthSense, a wearable gestural interface from 2009 by Pranav Mistry and Pattie Maes at MIT’s Fluid Interfaces Group.

SixthSense consists of a pocket projector, a mirror, and a camera, with the hardware components contained in a pendant-like wearable device. The projector and camera are both connected to a mobile computing device in the user's pocket. The projector displays visual information on surfaces, walls, and other physical objects, which become interfaces, and the camera uses computer vision to recognize and track the user's hand gestures and physical objects.

Mistry writes, “‘SixthSense’ frees information from its confines by seamlessly integrating it with reality, and thus making the entire world your computer.” The system cost $350 USD to build at the time with open source instructions on how to make your own device shared here.

Looking through the Humane patent “Wearable multimedia device and cloud computing platform with laser projection system” published in 2020 (shared by 9to5Google back in January), the following illustration reminded me of the SixthSense demo videos featured in both Maes’s and Mistry’s TED talks in 2009.

Left image: Humane patent “Wearable multimedia device and cloud computing platform with laser projection system” Fig. 14A, 2020; Right image: SixthSense demo, still from Pattie Maes’s TED talk, “Meet the SixthSense interaction” 2009.

“So, we are looking for an era where computing will actually merge with the physical world. And, of course, if you don't have any surface, you can start using your palm for simple operations. Here, I'm dialing a phone number just using my hand,” said Mistry. The Humane patent similarly depicts making a phone call in this fashion: “FIG. 14A shows a laser projection of a numeric pad on the palm of a user's hand for use in dialing phone numbers and other tasks requiring number input. 3D camera 1103 (depth sensor) is used to determine the position of the user's finger on the numeric pad. The user can interact with the numeric pad using, such as dialing a telephone number.”

Pranav Mistry using the SixthSense wearable device

I was lucky enough to see Maes give a keynote on SixthSense at the IEEE International Symposium on Mixed and Augmented Reality (ISMAR) in 2009, where I was also speaking and demoing my AR work. ISMAR (and the entire industry) was largely male-dominated at the time, and I was tremendously excited to hear from a pioneering woman in the field. Unfortunately, Maes’s keynote was not met with the same standing ovation she received at TED. I remember the room feeling very tense and Maes’s work being received with harsh criticism during the question period, despite an excellent presentation. I was honestly baffled by the response. My friend Ori Inbar documented it here in the “Top 10 Tidbits Reshaping the AR Industry” in 2009 (see #9: “The AR ivory tower has been shaken”). It’s been well over a decade now since SixthSense was introduced, and hopefully the industry and the public are finally ready for a project like this. I also very much appreciate the female energy in the Humane film.

Another one of the highlights of SixthSense is the ability to take a photo while staying in the moment. “Rather than getting your camera out of your pocket, you can just do the gesture of taking a photo, and it takes a photo for you,” said Mistry.

Pranav Mistry demonstrating the ‘take a picture’ capability using SixthSense, 2009.

Humane also wants to keep you in the moment. The patent reads, “Despite the convenience of mobile device embedded cameras, there are many important moments that are not captured by these devices because the moments occur too quickly or the user simply forgets to take an image or video because they are emotionally caught up in the moment.” And on subsequent pages of the patent: “A wearable multimedia device captures multimedia data of spontaneous moments and transactions with minimal interaction by the user. The multimedia data is automatically edited and formatted on a cloud computing platform based on user preferences, and then made available to the user for replay on a variety of user playback devices.”

It’s here in the patent that I begin to think of “life-logging” and am reminded of another wearable, this time from 2015: the Narrative Clip 2. “The Narrative Clip is a tiny, automatic camera and app that gives you a searchable and shareable photographic memory,” reads Narrative’s now-inactive Twitter account (Narrative filed for voluntary dissolution in September 2016, ending sales and support of its wearable device). Narrative’s Clip 2 featured an 8MP camera and Full HD video at 30 fps, with built-in GPS and storage of up to 4,000 photos or 80 minutes of video. The camera clipped onto your clothing or hung from a necklace, with the first version of Clip taking a photo every 30 seconds and Clip 2 offering customizable intervals.

Humane’s wearable is “a lightweight, small form factor, battery-powered device that can be attached to a user's clothing or an object using a tension clasp, interlocking pin back, magnet or any other attachment mechanism,” as per the patent. The device is also described in the patent as being attached to a necklace or chain and being worn around the neck so the camera is facing in the wearer’s view direction. “The wearable multimedia device includes a digital image capture device (e.g., 180° FOV with optical image stabilizer (OIS)) that allows a user to spontaneously capture multimedia data (e.g., video, audio, depth data) of life events (‘moments’).”

Narrative Clip

But we can’t talk about life-logging (the process of logging or recording everything occurring in our lives) without recognizing the work of OG life-logger and engineer Gordon Bell. In 2001, Bell began “MyLifeBits,” a life-logging experiment at Microsoft Research intended to collect a lifetime of storage on and about Bell, serving as an e-memory for recall, health, work, and even immortality. He wore a camera around his neck that automatically took a photograph every 30 seconds. Bell also captured all of his articles, lectures, memos, home movies, instant messaging transcripts, phone calls, and more. MyLifeBits attempted to fulfill Vannevar Bush's hypothetical Memex computer system (1945), storing the documents, pictures (including ones taken automatically), and sounds an individual experienced in their lifetime, to be accessed with speed and ease. The MyLifeBits software was developed by Jim Gemmell and Roger Lueder.

Legendary life-logger and engineer Gordon Bell

I had the pleasure of meeting Bell (and becoming part of his digital memory archive logged via his neck-worn camera) in 2013 at the IEEE International Symposium on Technology and Society (ISTAS), themed “Social Implications of Wearable Computers and Augmediated Reality in Everyday Life,” where I was also speaking. Bell gave a keynote entitled, “The Experience and Joy of Becoming Immortal.” In his abstract, Bell predicted “Extreme Lifelogging” with constant image or video capture would be commonplace by 2020. My invited ISTAS speech, “Augmented Reality and the Emergence of ‘Point-of-Eye’ in Visual Culture,” also referred to the recording of our lives through a hands-free camera, as was possible with Google Glass’s photography feature at the time. We’ve since seen this capability in Snap’s Spectacles as well as Meta’s Ray-Ban Stories glasses.

Bell stopped wearing his camera in 2016. Bell's all-encompassing life-logging was very early, and in many ways, the technology didn’t exist to support a seamless experience with ease of data management and retrieval. In an interview with Computerworld in 2016, Bell referred to the smartphone as a version of Bush's Memex. Reflecting on his discussion with Bell and the future of life-logging, Computerworld columnist Mike Elgan wrote, “Better hardware plus artificial intelligence should do both the capturing and the retrieval of all our personal data automatically. In fact, if trends continue in their current direction, retrieval will be so automated that our virtual assistants will offer facts not only from the Internet, but also from our own experiences and memories.” I absolutely agree with this statement and wrote about these themes in my book “Augmented Human” (it’s available in 5 languages if you’d like to read it).

“Augmented Human: How Technology is Shaping the New Reality” by Dr. Helen Papagiannis. The book is available in 5 languages worldwide.

In fact, this appears to be what sets Humane apart, and it is one of the reasons the patent and proposed device are so interesting to me.

Humane’s patent reads, “The multimedia data (‘context data’) captured by the wireless multimedia device is uploaded to a cloud computing platform with an application ecosystem that allows the context data to be processed, edited and formatted by one or more applications (e.g., Artificial Intelligence (AI) applications) into any desired presentation format (e.g., single image, image stream, video clip, audio clip, multimedia presentation, image gallery) that can be downloaded and replayed on the wearable multimedia device and/or any other playback device. For example, the cloud computing platform can transform video data and audio data into any desired filmmaking style (e.g., documentary, lifestyle, candid, photojournalism, sport, street) specified by the user.”

And later in the patent, “Images taken by the camera and its large field-of-view (FOV) can be presented to a user in ‘contact sheets’ using an AI-powered virtual photographer running on the cloud computing platform. For example, various presentations of the image are created with different crops and treatments using machine learning (e.g., neural networks) trained with images/metadata created by expert photographers. With this feature every image taken may have multiple ‘looks’ which involve multiple image processing operations on the original image including operations informed by sensor data (e.g., depth, ambient light, accelerometer, gyro).” You can say goodbye to all that time editing your photos with crops and filters — leave it to the ‘expert photographers’ AI and get back to enjoying your life.

Not only will the Humane system be able to edit and format the data via AI, it also appears to use AI as a personal assistant to help identify critical moments and people in your life to document (unlike the Narrative Clip, which automatically took photos at timed intervals), in turn giving you your own personal AI to help capture and curate your life memories for easy playback. “A digital assistant is implemented on the wearable multimedia device that responds to user queries, requests and commands. For example, the wearable multimedia device worn by a parent captures moment context data for a child's soccer game, and in particular a ‘moment’ where the child scores a goal. The user can request (e.g., using a speech command) that the platform create a video clip of the goal and store it in their user account. Without any further actions by the user, the cloud computing platform identifies the correct portion of the moment context data (e.g., using face recognition, visual or audio cues) when the goal is scored, edits the moment context data into a video clip, and stores the video clip in a database associated with the user account,” describes the patent.

A still from the film “Her”, 2013, written, directed, and co-produced by Spike Jonze.

I discussed cognizant computing and personalized experiences through AI in “Augmented Human,” referencing the 2013 film “Her” as well as Dr. Genevieve Bell, Vice President and Senior Fellow at Intel Corporation, who believes that we will go beyond “an interaction” with technology to enter a “trusting relationship” with our devices. Ten years from now, Genevieve Bell says, our devices will know us in a very different way by being intuitive about who we are.

Trust, by the way, is one of Humane’s core values.

“Humane is the next shift between humans and computing,” reads the company’s website. In an interview with Fast Company in 2019, Imran Chaudhri, Co-Founder & Chairman of Humane, said, “The next shift in technology is going to be led by AI, ML (Machine Learning), and CV (Computer Vision).” Chaudhri emphasized the impact the “Intelligence Age” will have on all of society. “I believe that AI has the ability to augment a lot of things that people are doing, actually simplify a lot of the mundane work that they have to do to achieve the things they want. You can do that through automation, you can do that through many ways, but AI definitely when done right has the potential to be the greatest gift to all of humanity. That I believe is the fundamental change that we are going to see.”

As I’ve said many times before, it’s not about augmented reality, it’s about augmenting humanity. AR and AI are no longer just about the technologies; they are about living in the real world and creating magical experiences that are human-centred. “Augmented Human” is about how these emerging technologies will enrich our daily lives and extend humanity in unprecedented ways.

In an interview with WIRED editor Greg Williams in 2020, Bethany Bongiorno, Co-Founder and CEO of Humane, and Chaudhri spoke about how the ultimate computing interface is one that completely disappears. “It is then that we turn back to our humanity,” said Chaudhri.

I agree with this sentiment and the premise of “calm technology,” a term coined by Mark Weiser in the 90s at Xerox PARC to describe technology as becoming more embedded and invisible, removing annoyances while keeping us more connected to what is truly important. In fact, it’s how I envision the next wave of AR and AI evolving, which I discuss in depth in “Augmented Human”: it’s not about being lost in our devices, it’s about technology receding into the background so that we can engage in human moments.

And I hope this is what Humane’s brilliant team brings to the world. ✌️

Humane’s Mission

Join me on social. Twitter: @ARstories Instagram: @ARstories